Web Audio Conference, Berlin 2018

Web audio, what is it? Besides the occasional blips and blops coming from your browser. In games you obviously have to deal with it. What about music? Not just listening. Editing. Creating. Collaborating.

Now we’re talking.

I’m actually not sure how HTML game developers handle audio. Is HTML5 audio enough, or do they need more? I’ve never been that much into gaming, and I thank my lucky stars for it. The world is enough of an avalanche of distractions nowadays. But music is a different story. I’m kind of a musician / developer, and I like to create stuff. Recently, as a side project, I’ve been developing an audio-related web app for, generally speaking, remixing and rearranging songs*.

Browsers are increasingly the main platform where we use our apps, and it is a platform that keeps getting more powerful. And of course, all the different browsers from different companies implementing all those APIs are a challenge. Or a fucking mess, as we web developers say.

So what can you achieve with audio in browsers? To find out, I decided to attend the Web Audio Conference in Berlin.

*Disclosure: I’m also a horrible procrastinator when it comes to my side projects.

1. my(music).generation(machine);

Berlin also offered a little extension of summer: I left home at +7 °C and arrived at +27 °C. That made the atmosphere in the lecture room at the Technical University a bit sweaty, too.

Discussing your favorite audio formats and combining that with laser cutting: that was a good way to get the conference going, in the keynote by Ruth John from live.js.

I did not know there is also a Gamepad API for the web. But now I do, thanks to Lisa Passing. She used a Game Boy-style controller (or something like it; remember, I don’t know anything about gaming) to control her party-4-2 app.

My generation. When I was active in the music scene, the internet was just on its way. Computers and audio? Never heard of them. Up here in the north, music meant playing metal or noisy rock and looking grumpy in photos. We made a few albums, recorded on analog (take that, hipsters). In the mid ’90s I discovered computers and digital audio, and my first sequencer, Cakewalk.

That was light years ago. Oh man. Old man, yelling at the cloud…

Music.generation.run();

You can really generate music based on anything. Just define a set of rules of some kind, for example based on a website’s live user data. Nick Violi did just that and visualized it with note patterns. I immediately thought that this is what critical log monitoring should be like in the banking world. Minority Report, but listening to nice tunes instead of watching murders. You could perhaps notice critical changes in an application’s load by hearing changes in the background music, not to mention errors (psycho-style violins there, obviously). Presuming you haven’t lost your mind after listening to log-composed tunes all day long, day after day.
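To make the “rule set” idea concrete, here is a minimal sketch of one possible rule. It is my own assumption, not how Nick Violi did it: clamp a live metric (say, requests per second) into a range and map it onto a pentatonic scale, so a busier site plays higher notes and nothing ever sounds too dissonant. The names `metricToMidi` and `midiToFrequency` are hypothetical; only the MIDI-to-Hz formula (MIDI note 69 = A4 = 440 Hz) is standard.

```typescript
// Hypothetical rule set: map a live metric onto a pentatonic scale.
// Not Nick Violi's implementation, just one way to do it.

// Semitone offsets of a major pentatonic scale within one octave.
const PENTATONIC = [0, 2, 4, 7, 9];

// Map a metric value in [min, max] to a MIDI note number spanning
// `octaves` octaves upward from `baseNote` (60 = middle C).
function metricToMidi(
  value: number,
  min: number,
  max: number,
  baseNote = 60,
  octaves = 2,
): number {
  // Clamp and normalise the metric into [0, 1].
  const t = Math.min(1, Math.max(0, (value - min) / (max - min)));
  const steps = PENTATONIC.length * octaves;
  // Pick one of `steps` scale degrees; t = 1 lands on the top degree.
  const degree = Math.min(steps - 1, Math.floor(t * steps));
  const octave = Math.floor(degree / PENTATONIC.length);
  return baseNote + 12 * octave + PENTATONIC[degree % PENTATONIC.length];
}

// Standard equal-temperament conversion: MIDI 69 = A4 = 440 Hz.
function midiToFrequency(note: number): number {
  return 440 * Math.pow(2, (note - 69) / 12);
}
```

In a browser you could feed the result to an oscillator with something like `osc.frequency.setValueAtTime(midiToFrequency(metricToMidi(load, 0, 1000)), audioCtx.currentTime)`. Sticking to a pentatonic scale is a design choice: any combination of its notes sounds reasonably consonant, which matters when the “composer” is your server load.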

I’ve been following Tero Parviainen on Twitter for a while now. I think I occasionally saw his name in some Angular-related tweets, and that did not make me click the ‘follow’ button yet, but his experiments with generating music with AI (Magenta) did.

It is funny how you feel like some sort of stalker when you finally meet someone you have been following and have a chat IRL. But I guess that is OK, since the other person has volunteered for that. Tero talked about his experiments with the TensorFlow-based machine learning project Magenta and its MusicVAE model, and showed his latest one, https://incredible-spinners.glitch.me/. It is really hypnotic. Even if you are not into web audio, you should really follow him.

Playsound.space is a sort of search engine / player for Creative Commons-licensed sound clips. The clips are fetched through the Freesound API and visualized as spectrograms. Don’t let the minimalistic, rough UI distract you. When Ariane Stolfi demonstrated a few basic functions in her presentation, it made me really interested in this app, and later at the concert this became even clearer: playsound.space is a powerful instrument in itself, and you can create a lot of crazy stuff with it.

Apparently you can mix Ruby, JavaScript and Ableton Live, as Hilke Ros demonstrated. I have not used Ableton Live myself yet, but I got the feeling at the conference that it may be a good choice among commercial DAWs if you also want to experiment with music on the web.

I think I had spotted this earlier on Twitter, but it was fun to hear a walkthrough of how Mathieu Henri solved the ‘problem’ of his “music for tiny airports”.

“It’s a niche”

Yep, that’s the word I was looking for. And that is what Tero said at the informal hangout in a Bierstube close to the venue. And of course it is. The web audio scene still consists of a relatively small number of people, compared to, say, the React community. Or any major JavaScript library or framework.

There are upsides to this. If you went to a three-day React conference including workshops, I’d say you would pay at least three times as much for the ticket as I did for my early-bird ticket. And this is the kind of conference where many people pay their own way, not their employer. Like me, for instance. So, affordable tickets: very good. And the community is more close-knit. No ‘how to handle the state’ wars there yet.

Abstractions, sweet abstractions!

Tone.js abstracts away the harder parts to make the Web Audio API (WAA) easier to use. A lot of presenters at the conference mentioned using Tone.js. Seems smart: concentrate on the essential stuff instead of building everything from scratch.
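As an example of what such an abstraction hides: Tone.js lets you write durations in musical notation like "4n" (a quarter note) or "1m" (one measure) and converts them to seconds for you, for instance via `Tone.Time("4n").toSeconds()`. The sketch below is a hypothetical, stripped-down version of that arithmetic. It assumes 4/4 time and supports only the "Nn" and "Nm" forms; it is not Tone.js’s actual implementation.

```typescript
// Hypothetical helper showing the arithmetic behind musical time notation.
// Supports only "<N>n" (note value, e.g. "4n" = quarter note) and
// "<N>m" (N measures), assuming a 4/4 time signature.
function notationToSeconds(notation: string, bpm: number): number {
  const match = /^(\d+)([nm])$/.exec(notation);
  if (!match) {
    throw new Error(`Unsupported notation: ${notation}`);
  }
  const n = Number(match[1]);
  if (n === 0) {
    throw new Error(`Zero is not a valid note value or measure count: ${notation}`);
  }
  const quarter = 60 / bpm; // one beat (a quarter note) in seconds
  const wholeNote = 4 * quarter; // in 4/4, one measure equals a whole note
  // "8n" is an eighth of a whole note; "2m" is two whole measures.
  return match[2] === "n" ? wholeNote / n : n * wholeNote;
}
```

With the library itself you would simply write something like `synth.triggerAttackRelease("C4", "8n")` and let Tone.js handle the conversion (and the scheduling) for you, which is exactly the point of using it.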

Same thing with Magenta.js, which lets you use AI for the aforementioned music (and image) related stuff. That lowers the barrier to start experimenting with it, without knowing what all the scary-looking cow skulls in mathematical patterns do.

Visualizing audio

That can be artistic. Or scientific. Or educational. Visualizing sound waves with tools like fav.js. Picking chords from a UI to match songs in order to learn them: Jam with Jamendo. And then there is cables, a visual programming tool for making flashy WebGL and Web Audio demos just by connecting pieces on the screen.
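Under the hood, many waveform displays do something like the following: reduce the samples to one min/max pair per pixel column and draw a vertical line between the two values. This is a generic sketch, not how fav.js works. In a browser the samples could come from `AudioBuffer.getChannelData(0)` or from `AnalyserNode.getFloatTimeDomainData()`; the `computePeaks` name and the `Peak` type are my own.

```typescript
// One column of a waveform drawing: the lowest and highest sample value
// found in that column's slice of the audio.
interface Peak {
  min: number;
  max: number;
}

// Reduce samples (values in [-1, 1]) to one min/max pair per pixel column.
function computePeaks(samples: Float32Array, columns: number): Peak[] {
  const peaks: Peak[] = [];
  const bucketSize = samples.length / columns;
  for (let c = 0; c < columns; c++) {
    const start = Math.floor(c * bucketSize);
    const end = Math.min(samples.length, Math.floor((c + 1) * bucketSize));
    if (start >= end) {
      // More columns than samples: this column gets no samples, draw it flat.
      peaks.push({ min: 0, max: 0 });
      continue;
    }
    let min = samples[start];
    let max = samples[start];
    for (let i = start + 1; i < end; i++) {
      min = Math.min(min, samples[i]);
      max = Math.max(max, samples[i]);
    }
    peaks.push({ min, max });
  }
  return peaks;
}
```

Drawing is then a loop over the returned array, mapping `min` and `max` to y coordinates on a canvas. Keeping both extremes (rather than, say, averaging) is what preserves the spiky look of drum hits at low zoom levels.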

Web Audio Concert

You don’t often hear at the start of a concert that the audience should unmute their smartphones and turn the volume to the max. All the way up to eleven. But in the basement of SoundCloud’s Factory in Berlin, we did.

A string trio with a bunch of noisy collaboration from the audience’s phones: that certainly was something new. As I already mentioned, seeing playsound.space in action was pretty impressive, this time in a sort of audio-installation way.

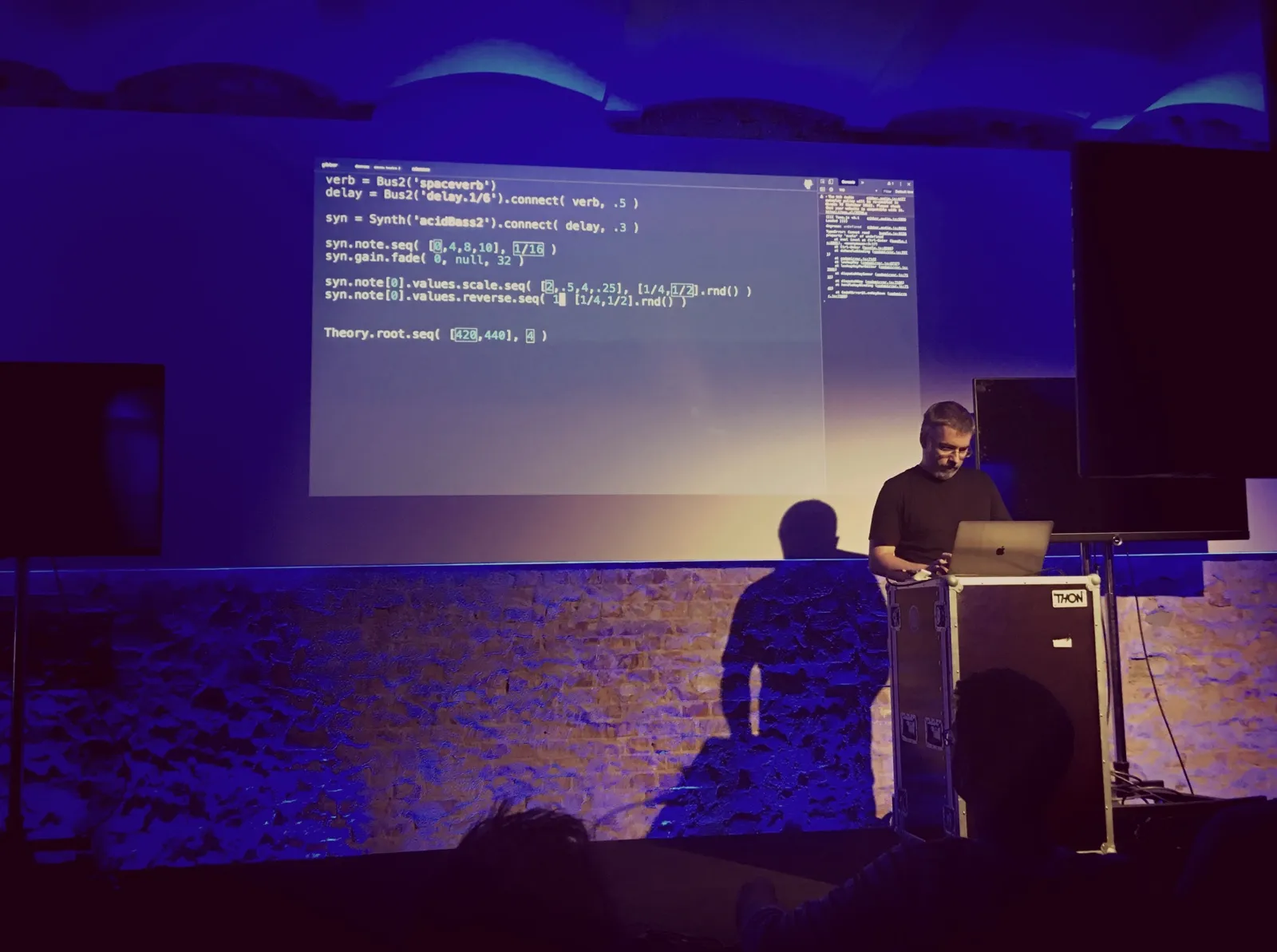

Charles Roberts, who gave a presentation using gibber.js during the day, dropped the funkiest beats of the evening. Live coding with Gibber, he created these amazing beats and synth patterns. Wow.

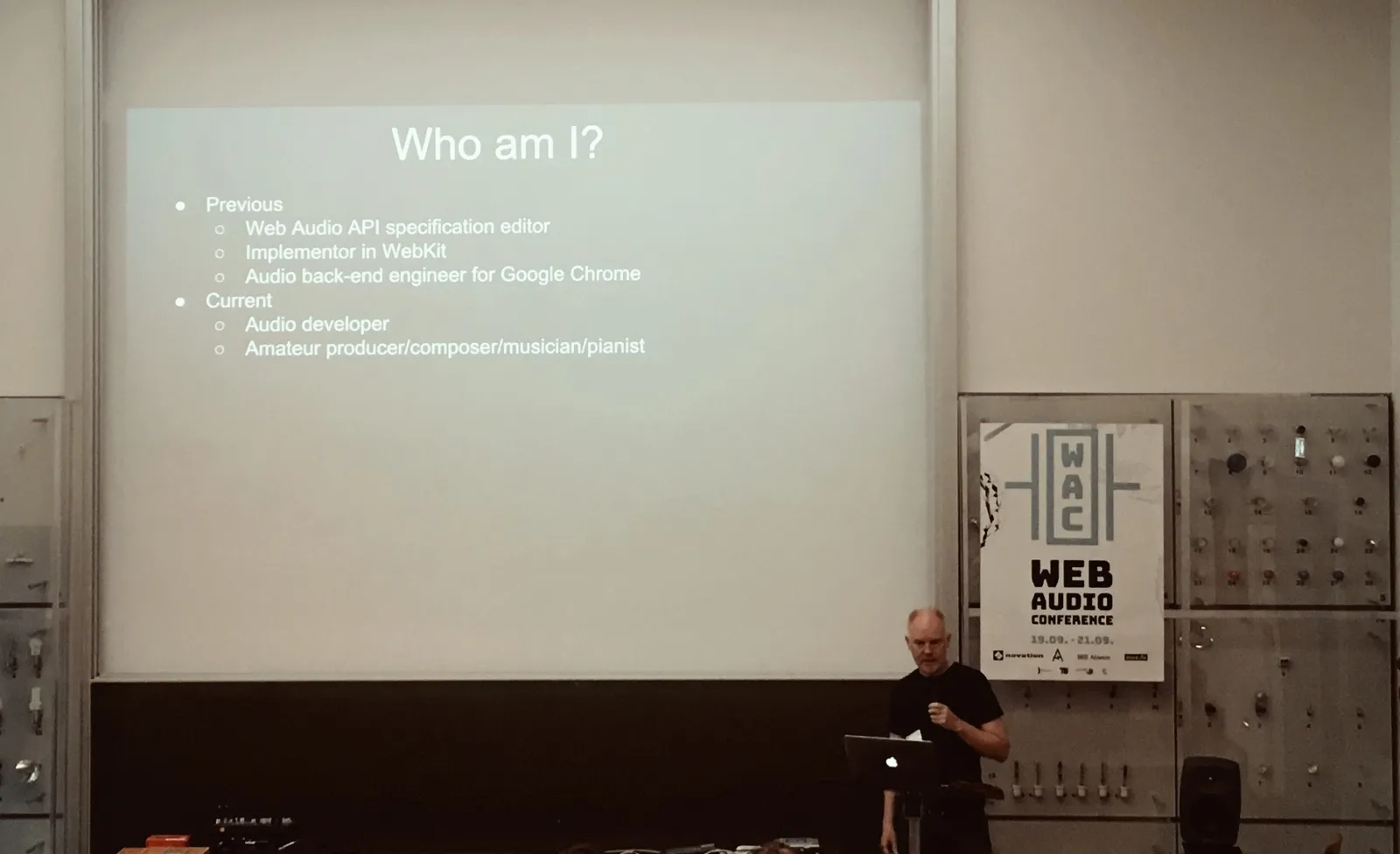

2. Memory lane

Chris Rogers, the creator of the Web Audio API himself, opened the second day, so we all heard how it all got started. It started as a Google skunkworks project (20% of an employee’s time, freely allocated), just on his own machine, without source control at first. So at some point it took some convincing to be allowed to carry on with the work.

Fortunately, Apple got interested in his work, which gave some leverage. When Google forked Apple’s WebKit to make its own engine, Blink, that naturally made the work harder, because from that point on Chris had to merge his changes to Google’s fork into Apple’s version as well.

Eventually the Web Audio API’s first implementation was finished, and a first draft of the W3C spec was created. I did not know this, but as a prerequisite Chris Rogers also had to implement support for fetching binary data with XMLHttpRequest, because it was missing in 2010!

He left Google in 2013, after the Web Audio API had been successfully delivered to all of us. Since then he has focused on making music and writing his own software for it. For a guy who made this conference possible in the first place, he seemed really down-to-earth and modest. It was great to hear his stories unofficially too, so to speak. An interesting career, and certainly one that can be said to have made a difference.

By the way, you can listen to his beats on SoundCloud.

Let me assemble that audio for you

When a guitar is plugged into a computer, you can expect something from a presentation. Michel Buffa let it rock when it was time to dive deep into digital signal processing. You can wire together quite a nice bunch of Web Audio plugins nowadays, and actually use them in other software. Michel Buffa on Twitter: “#wac2018 WebAudio Plugins running on the soundtrap DAW, #webaudio. What à day !… ”. You can test some plugins here: Plugin tester. Standardization of Web Audio plugins is in progress.
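To give a taste of the DSP involved, here is one of the simplest building blocks of a guitar distortion effect: a soft-clipping transfer curve for the Web Audio API’s `WaveShaperNode`. The tanh curve, the `drive` parameter and the `makeSoftClipCurve` name are my own choices for illustration, not what Michel Buffa’s plugins use.

```typescript
// Build a transfer curve for a WaveShaperNode: input x in [-1, 1] is mapped
// to tanh(drive * x), normalised so that x = ±1 still maps to ±1.
// Higher drive values flatten the curve sooner, giving more distortion.
function makeSoftClipCurve(drive: number, size = 1024): Float32Array {
  if (drive <= 0) {
    throw new Error("drive must be positive");
  }
  const curve = new Float32Array(size);
  const norm = Math.tanh(drive);
  for (let i = 0; i < size; i++) {
    const x = (i * 2) / (size - 1) - 1; // evenly spaced from -1 to 1
    curve[i] = Math.tanh(drive * x) / norm;
  }
  return curve;
}
```

In a browser you would create the node with `audioCtx.createWaveShaper()`, assign `shaper.curve = makeSoftClipCurve(5)`, optionally set `shaper.oversample = "4x"` to reduce aliasing, and connect it between the guitar input and `audioCtx.destination`. A tanh curve is a common choice because it clips smoothly instead of chopping the waveform off hard, which sounds warmer.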

iPlug2 is a desktop framework for building plugins in C++. It has now been extended to allow the same code to be used to create Web Audio Modules, browser-based audio effects and instruments, using WebAssembly. This is a major step in making browsers credible audio tools.

Jari Kleimola has done a lot of work with these things, and the results are pretty amazing. A true ‘see it to believe it’ case is of course Propellerhead’s Europa synth, which you can now try in your browser.

Sound delivery date(), always late?

Latency and timing. These are things you have to worry about even in native audio apps. A couple of talks addressed them. Walker Henderson has experimented with overcoming latency issues in his looper app. I liked his approach, and I look forward to trying this stuff. Christoph Guttandin talked about Timing Object, an existing library for handling timing between multiple web clients.
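On the timing side, a common pattern in Web Audio apps (described in Chris Wilson’s “A Tale of Two Clocks” article) is lookahead scheduling: a JavaScript timer wakes up every few tens of milliseconds and schedules every note that falls within a short window ahead on the sample-accurate audio clock, `AudioContext.currentTime`. This sketch shows only the windowing logic. The `collectBeats` function, its state object and the fixed-tempo click are my assumptions, not what Walker Henderson’s looper or Timing Object do.

```typescript
// Lookahead scheduling sketch: return the audio-clock start times of every
// beat that begins before currentTime + lookahead, and advance the
// scheduler state past them. Names are hypothetical.
interface SchedulerState {
  nextBeatTime: number; // audio-clock time (seconds) of the next unscheduled beat
}

function collectBeats(
  state: SchedulerState,
  currentTime: number, // e.g. audioCtx.currentTime in a browser
  lookahead: number, // how far ahead to schedule, in seconds
  bpm: number,
): number[] {
  const secondsPerBeat = 60 / bpm;
  const due: number[] = [];
  while (state.nextBeatTime < currentTime + lookahead) {
    due.push(state.nextBeatTime);
    state.nextBeatTime += secondsPerBeat;
  }
  return due;
}
```

In a browser you could call it from `setInterval(..., 25)` with `audioCtx.currentTime` and start an `OscillatorNode` at each returned time via `osc.start(t)`. Because the start times are computed on the audio clock, a late timer callback only eats into the safety margin instead of making the beat wobble, as long as it still arrives within the lookahead window.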

Collaborator!

So there are some really cool multitrack editors on the web, mimicking native DAWs. Some of them look pretty damn impressive, like Amped Studio and Soundtrap. What are these good for, and who are they aimed at? I suppose if you already use native software like Logic, Cubase or Pro Tools, you are not going to move from it to web apps.

But if you’re looking for something easier (and cheaper) to start laying down some tracks, this is something to consider. And most importantly, even for more software-savvy musicians, I think these are excellent tools for collaborating. For sharing and developing song ideas. With anyone, anywhere.

Other kinds of collaboration were on show, too. At EarSketch you do it by coding. And at Multi Web Audio Sequencer you can choose a room and create beats simultaneously with other people. Thanks, Xavier, for demoing this. Again, so cool!

3. Back to the future

Paul Adenot from Mozilla gave some insights into the future of Web Audio and of Audio Worklets at Mozilla. Paul is a member of the W3C Audio Working Group and has been working on the Web Audio API, which reached Candidate Recommendation (CR) status just a day before the conference started. Mozilla’s implementation of Audio Worklets will be shipping soon, so good news there too.

By the way, if you want to see the test suite for Web Audio API changes, here it is. It is run against every pull request before it is merged: web-platform-tests dashboard.

Web Audio Worklets

Maybe the most remarkable new feature in Web Audio is Audio Worklets, which Chrome finally introduced at the end of 2017. Roughly, it means that user-supplied scripts that process audio run on the same thread as the Web Audio API’s own audio rendering, separate from the main thread that renders the UI. That makes custom audio processing far less glitch-prone than with the older ScriptProcessorNode, whose callbacks ran on the main thread and competed with UI work.
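Here is a minimal sketch of what such a user-supplied script can look like: a simple gain processor. The kernel follows the shape of `AudioWorkletProcessor.process(inputs, outputs, parameters)`: arrays of inputs and outputs, each holding one `Float32Array` per channel for one render quantum (128 frames). The registration is guarded so the file also runs outside an `AudioWorkletGlobalScope`; the processor name `"half-gain"`, the `applyGain` helper and the fixed gain of 0.5 are hypothetical.

```typescript
// Processing kernel with the same argument shape as
// AudioWorkletProcessor.process(): outputs[0][channel][frame].
function applyGain(
  inputs: Float32Array[][],
  outputs: Float32Array[][],
  gain: number,
): boolean {
  const input = inputs[0] ?? [];
  const output = outputs[0] ?? [];
  for (let ch = 0; ch < output.length; ch++) {
    const inCh = input[ch];
    const outCh = output[ch];
    if (!inCh) continue; // disconnected input: leave the output silent
    for (let i = 0; i < outCh.length; i++) {
      outCh[i] = inCh[i] * gain;
    }
  }
  return true; // returning true keeps the processor alive
}

// These globals only exist inside an AudioWorkletGlobalScope (audio thread).
declare class AudioWorkletProcessor {}
declare function registerProcessor(
  name: string,
  processorCtor: new () => AudioWorkletProcessor,
): void;

if (typeof registerProcessor === "function") {
  registerProcessor(
    "half-gain",
    class extends AudioWorkletProcessor {
      process(inputs: Float32Array[][], outputs: Float32Array[][]): boolean {
        return applyGain(inputs, outputs, 0.5);
      }
    },
  );
}
```

On the main thread you would load the compiled file with `await audioCtx.audioWorklet.addModule("half-gain.js")` and create the node with `new AudioWorkletNode(audioCtx, "half-gain")`. A real effect would more likely expose the gain as an `AudioParam` via `static get parameterDescriptors()` than hard-code it.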

Combine that with WebAssembly, which lets DSP code written in languages like C++ run in the browser at near-native speed, and we are living in exciting times indeed.

Workshops

Being able to use actual audio plugins (the real hardcore stuff you use in your DAW, or Digital Audio Workstation, software) in your browser with a few lines of JavaScript feels amazing. Of course, if you are not really into this audio thing, this is all just jargon to you. But trust me, it does.

I participated in two workshops about Web Audio plugins and modules. Most likely I won’t be trying to code these myself, but it was very interesting to see the tools and get a glimpse of the process.

Conclude somehow. Don’t panic.

So, it may be a niche. But for this passionate bunch of people it is more. And what a bunch of nice, friendly people this community is. This band of brothers and sisters, who may not necessarily know how to play anything but still yearn to create something audio-wise.

All the people who gave a talk or demo or installation. Whether I mentioned you or not in my silly little blog, hats off to you. You all are way ahead of me.

If you work or tinker with web audio, you should absolutely join the Slack group, where you can get answers to technical issues from people who literally know the core of the API.

Videos of the presentations and the concert are available on YouTube here.

The next one, the fifth (almost) annual Web Audio Conference, will be in Trondheim, Norway, in December 2019. Maybe see you there.